Epistemological Intervention: Collaborative Ethnography between a human and a machine-learning algorithm

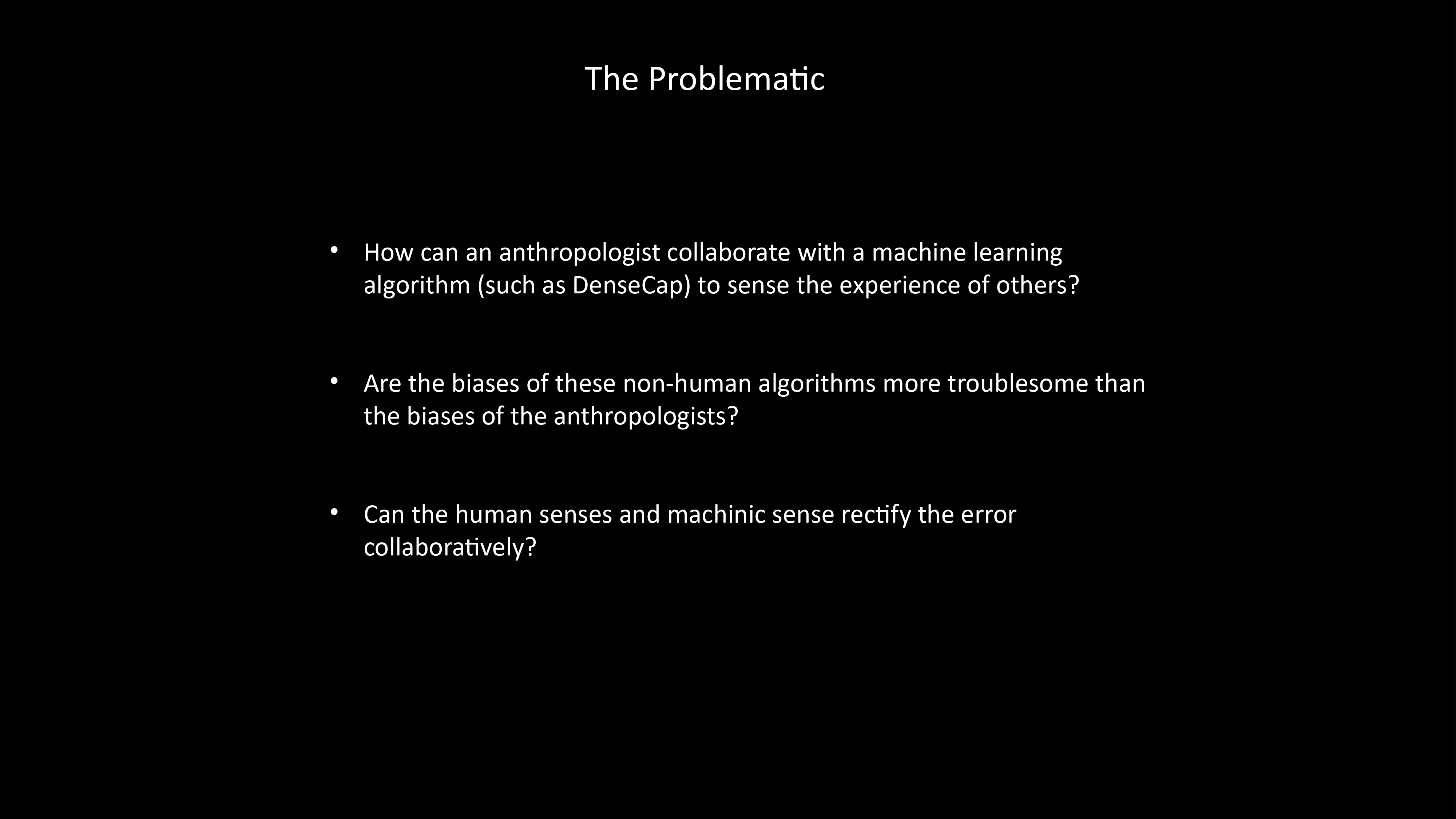

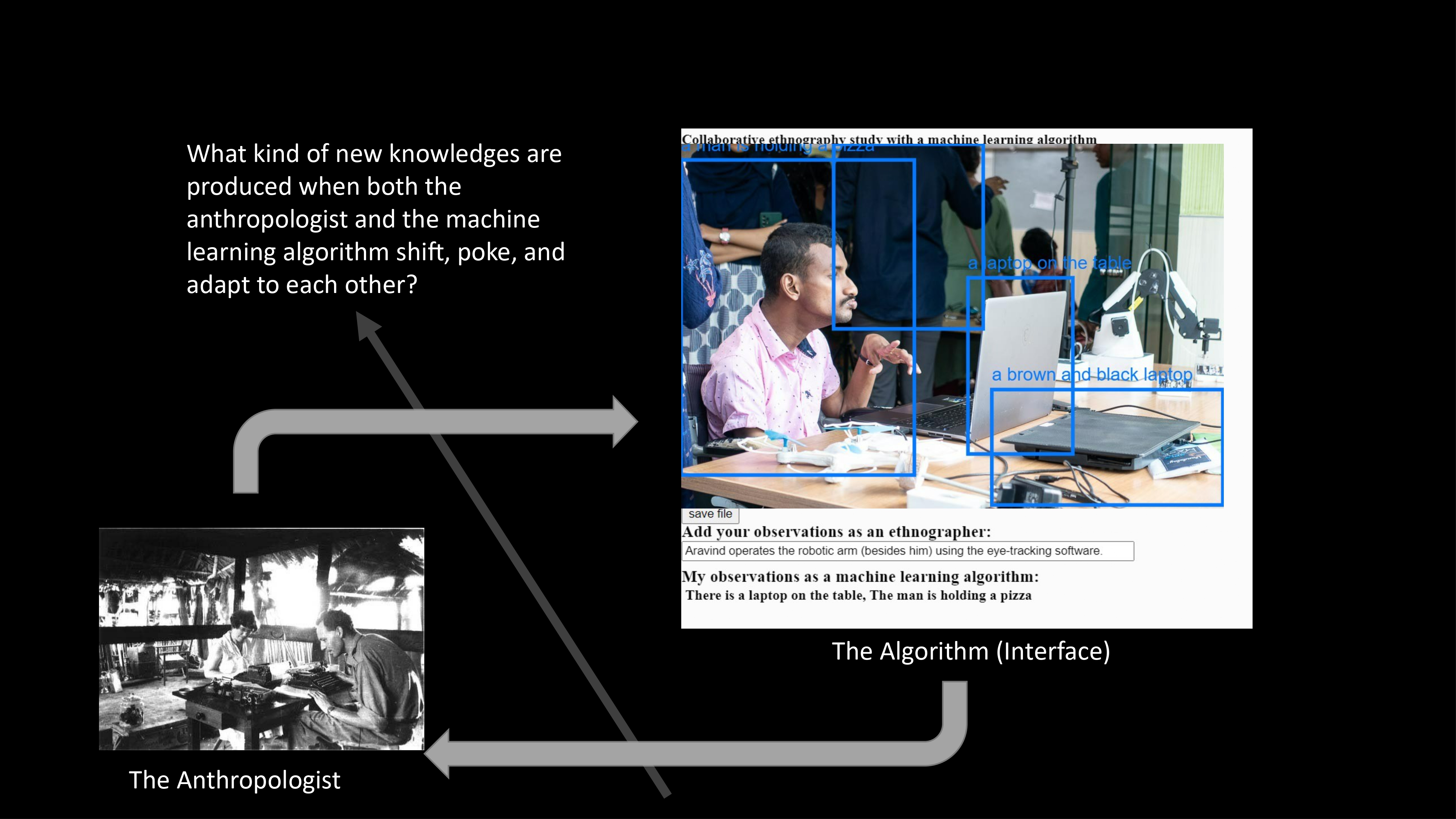

Epistemological Intervention (EI) is a research-creation work situated at the intersection of human-computer interaction, anthropology, and artificial intelligence research. It asks the following research question: how can we design interfaces that bring together the human and the non-human to create new forms of epistemology? To explore this, EI brings a human and a non-human (a machine learning algorithm) into collaboration to search for new ethnographic methodologies of knowing the world, which anthropologist Dara Culhane calls “co-creative” knowledge making.

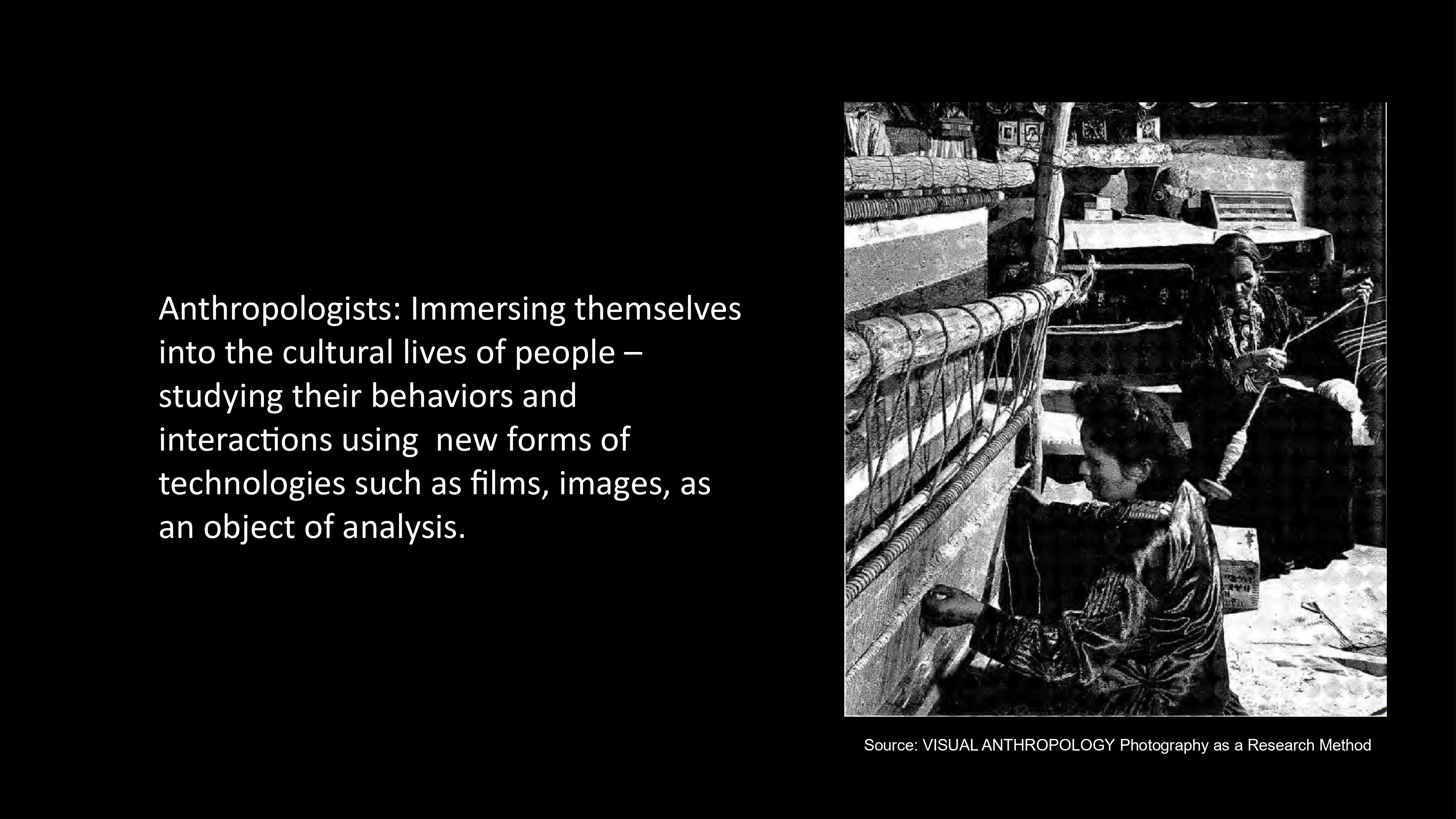

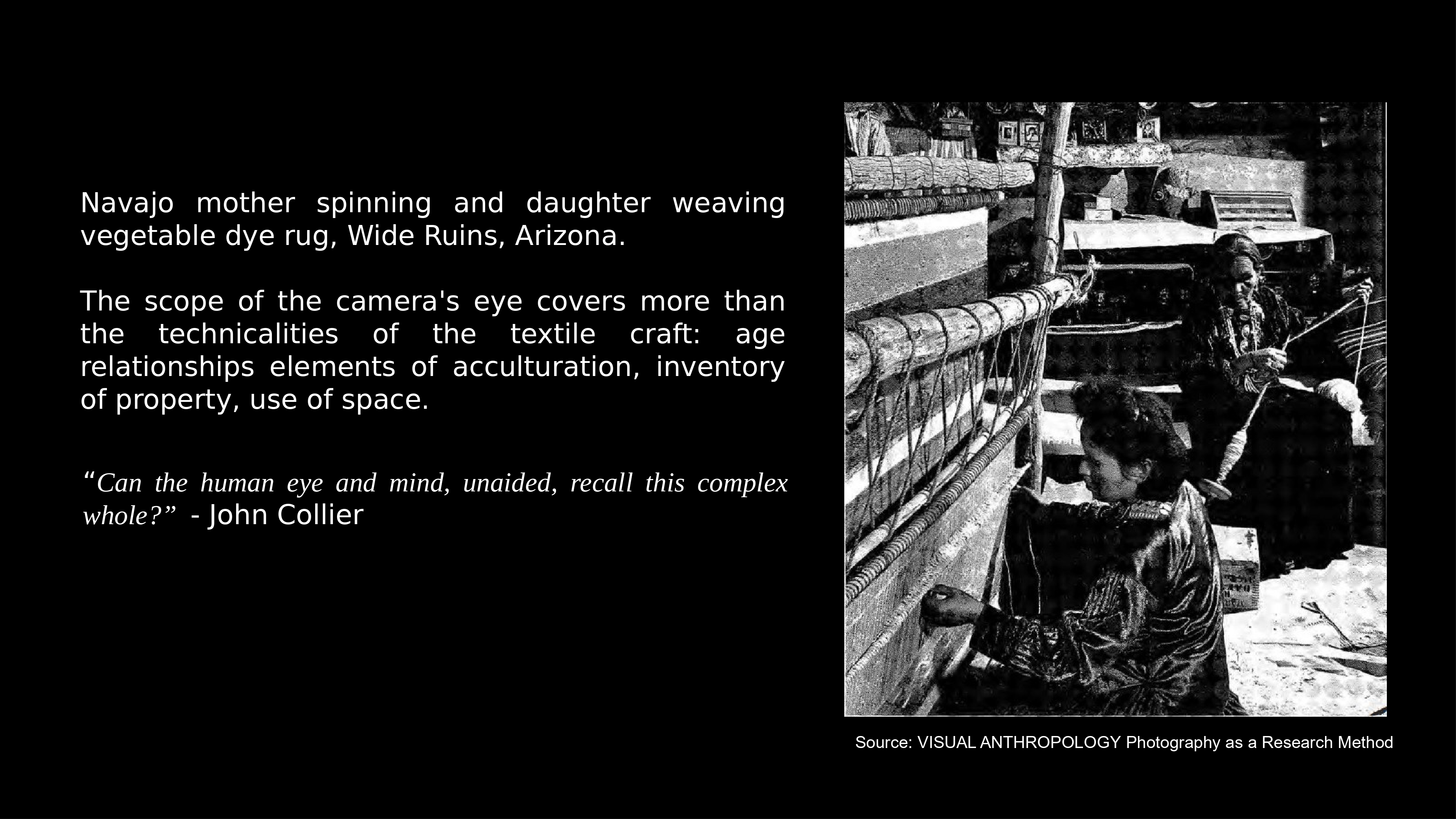

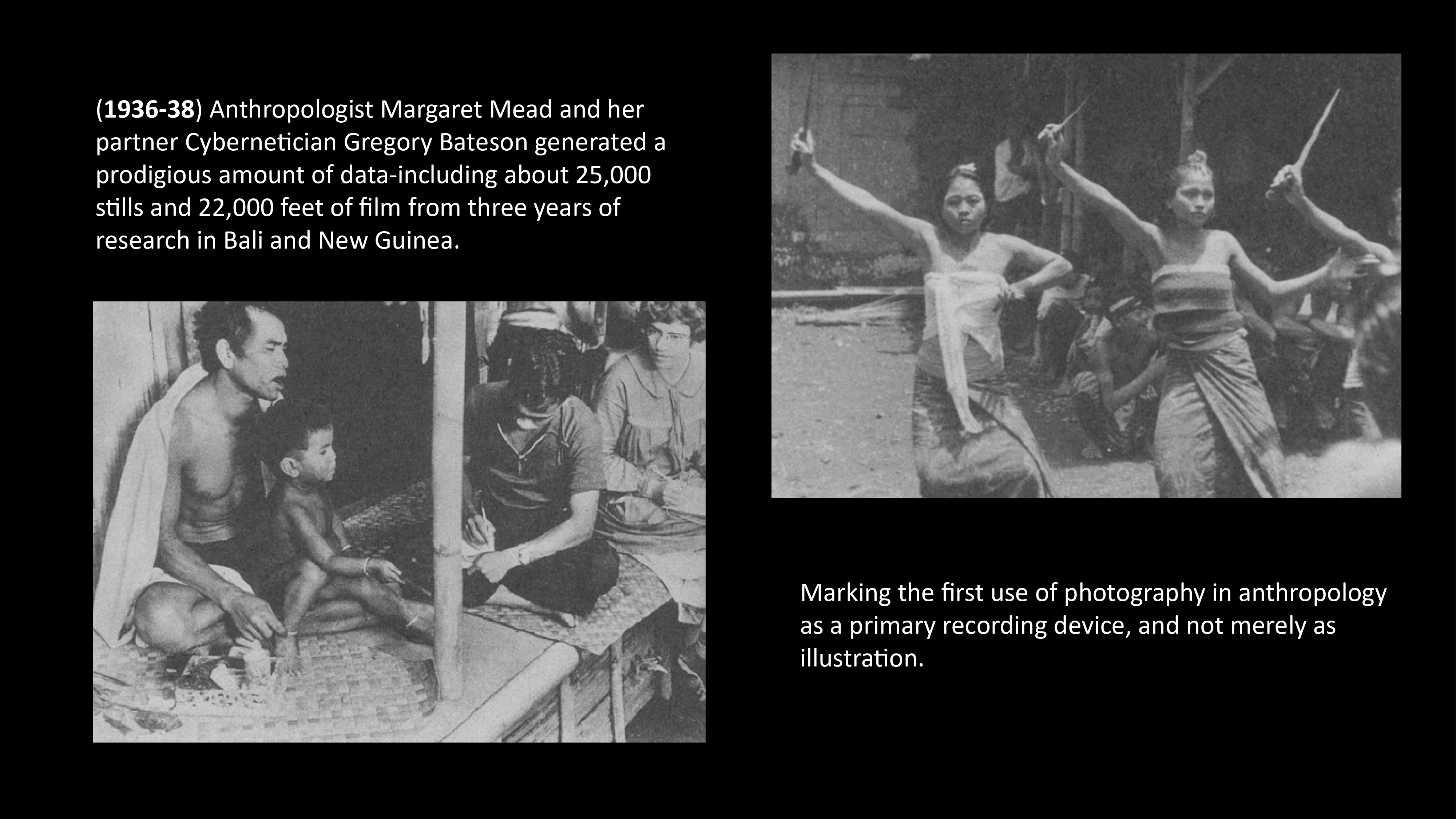

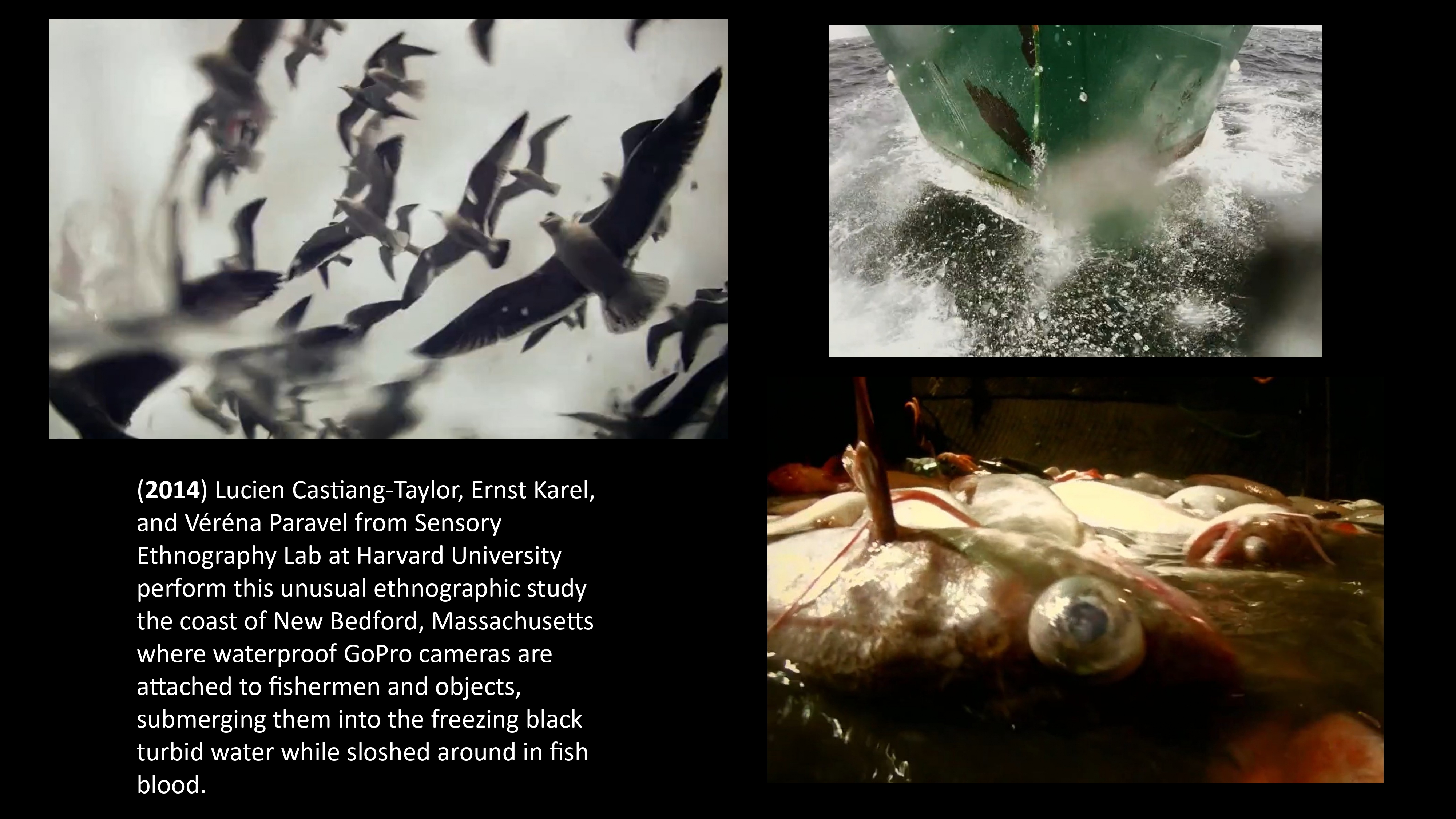

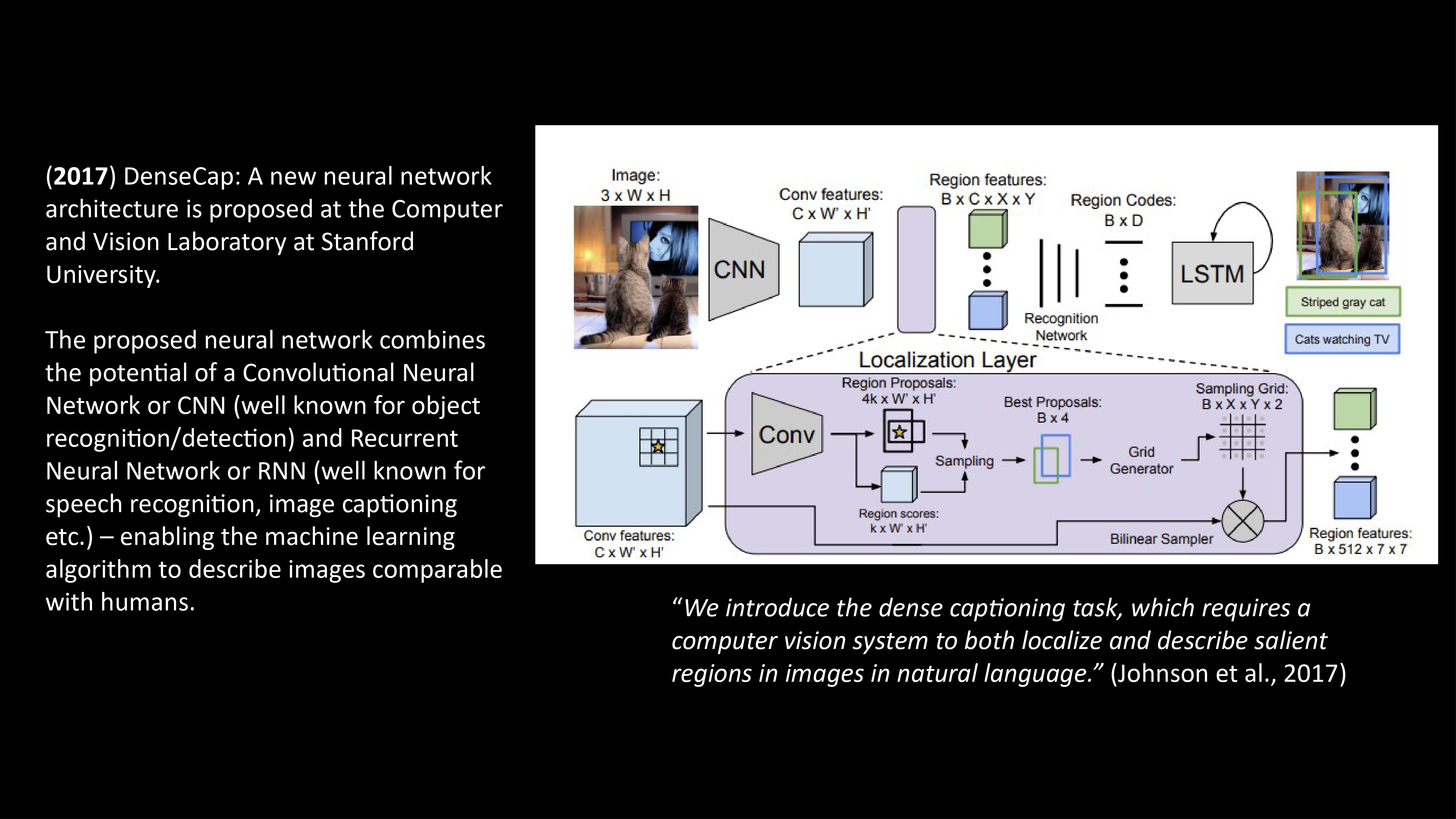

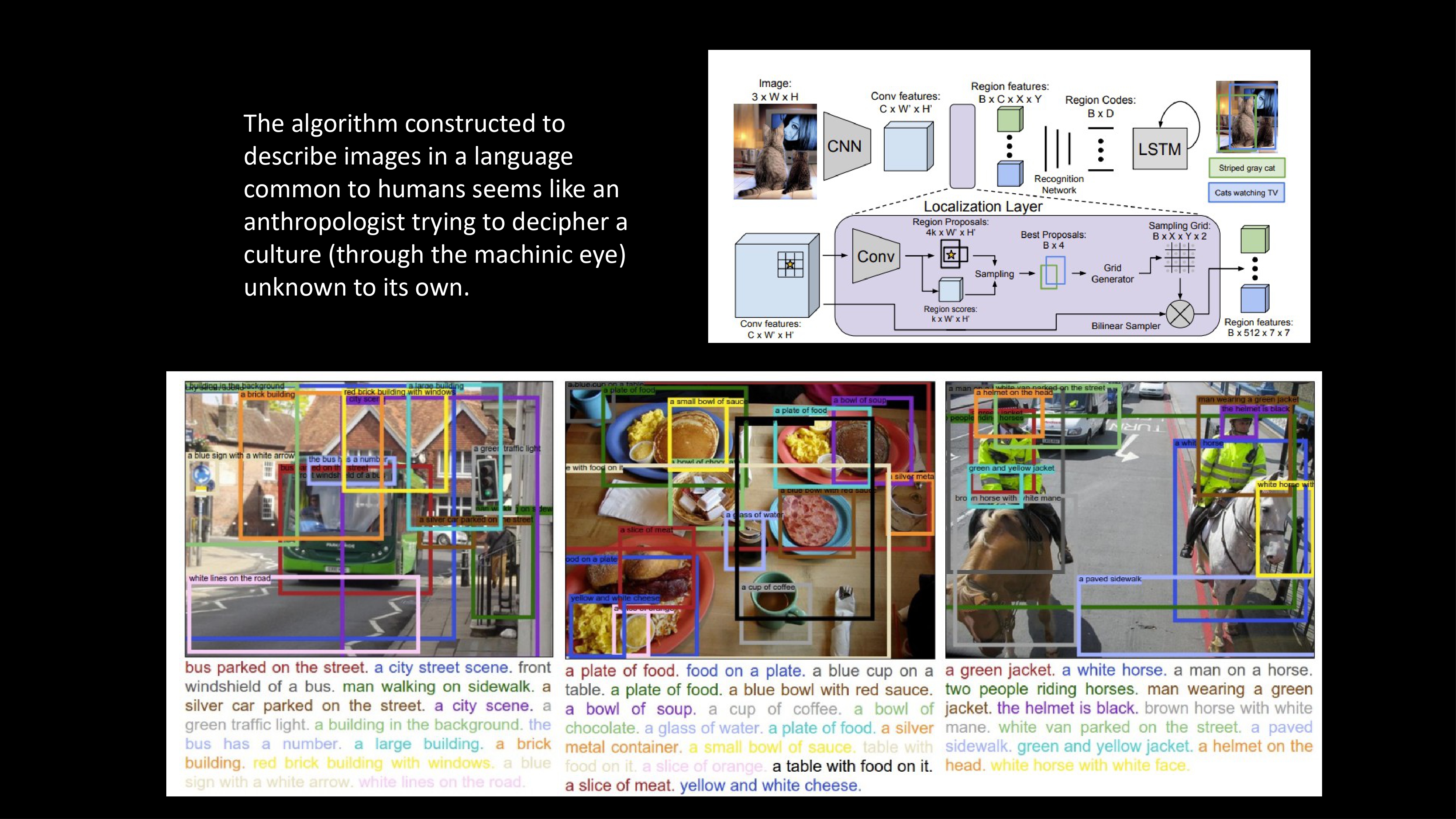

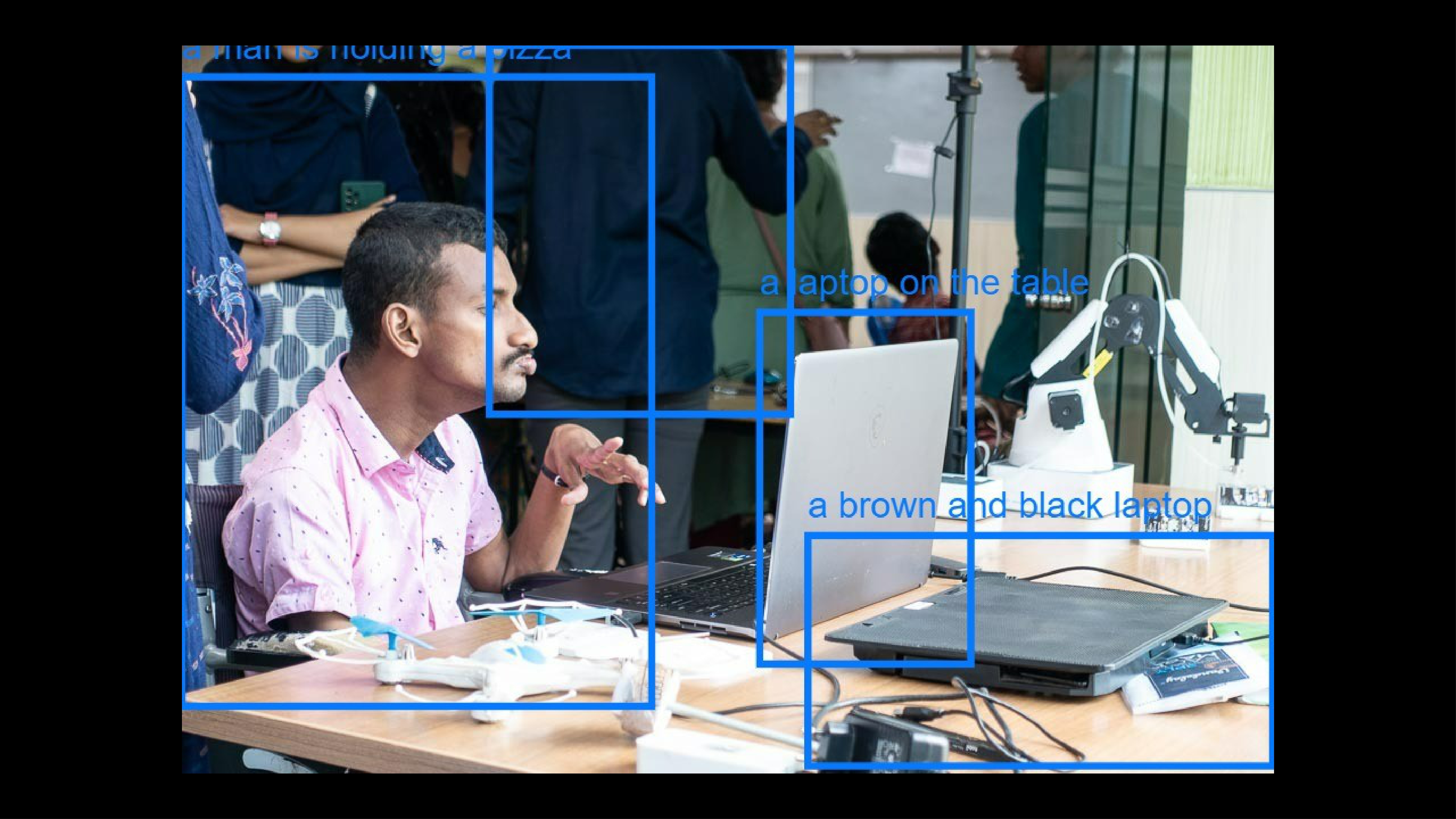

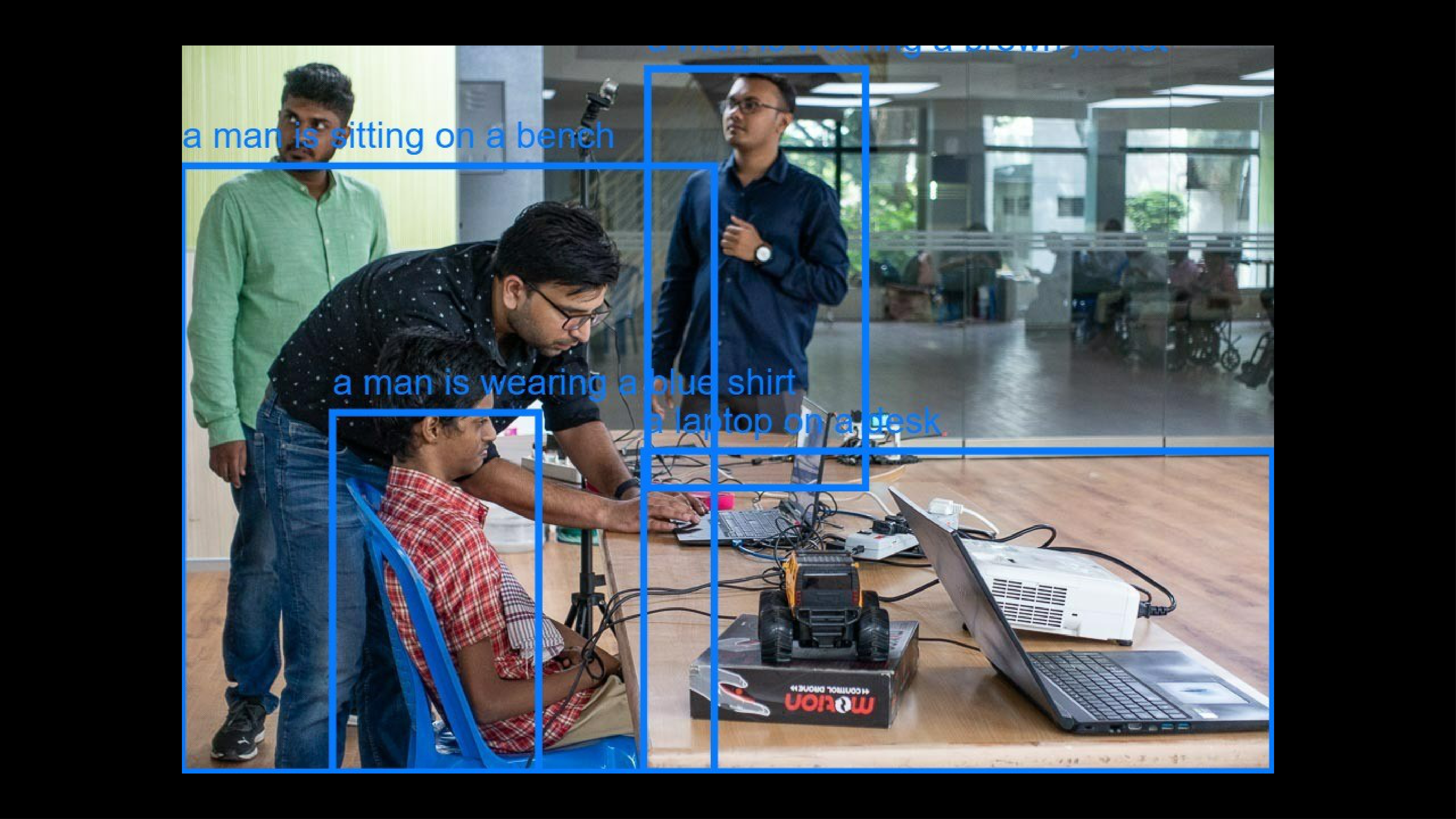

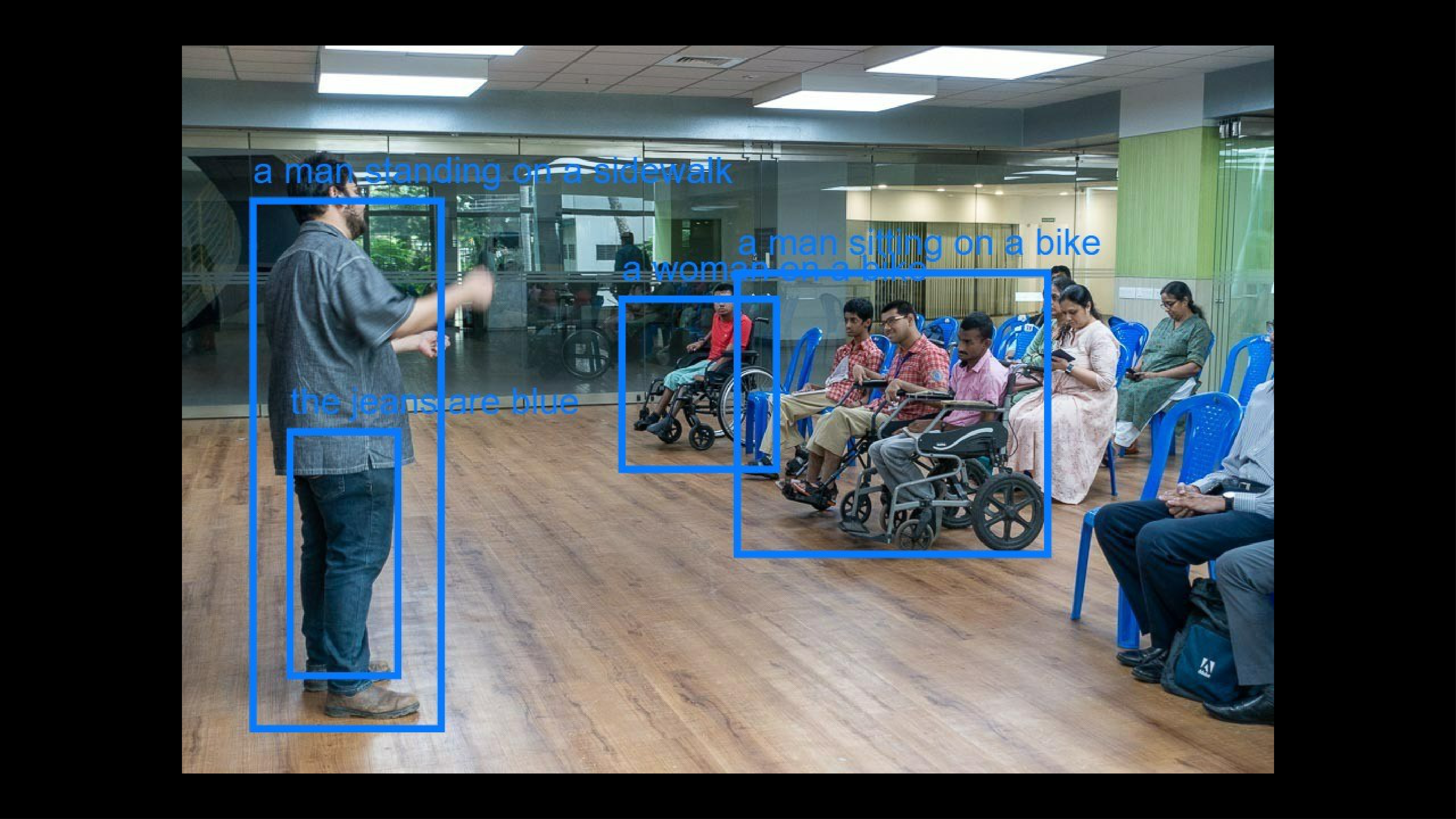

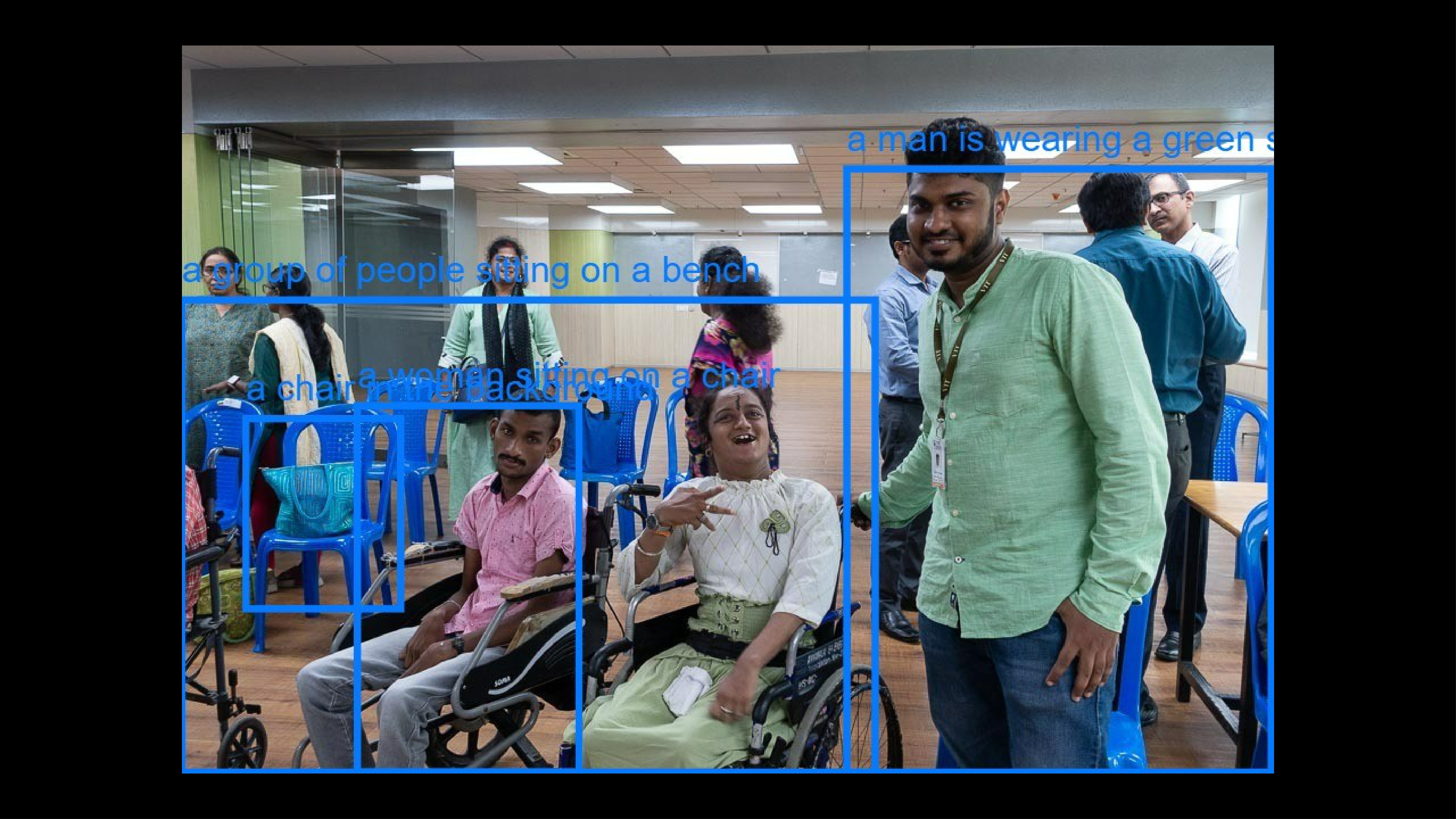

While computer scientists have developed sophisticated image-to-text computer vision algorithms (algorithms that use machine learning to describe images in text), most of these algorithms (Johnson, 2012; Ren, 2015) are still expected to follow the same conventions that humans use to describe images. When their descriptions diverge from human perception, the algorithms are considered less accurate or said to have poor “generalization” capabilities. This work, however, posits that image-to-text computer vision algorithms, even when less accurate, offer a different trajectory of epistemological intervention for understanding cultures, much like the ethnographic technique anthropologists practice to understand and analyze cultures other than their own. It therefore presents an unusual ethnographic study performed by a human and a machine learning algorithm through an interactive web-based interface. While the ethnographer draws on his prior embodied knowledge of living in the world to intervene in a different culture (a maker space culture) and logs his findings on the interface, the computer vision algorithm, in parallel, draws on the knowledge it gained from the Visual Genome dataset (Krishna et al., 2017) on which it was trained and adds its own findings to the database. The result merges an analog human and a digital machine to co-create new forms of epistemology.

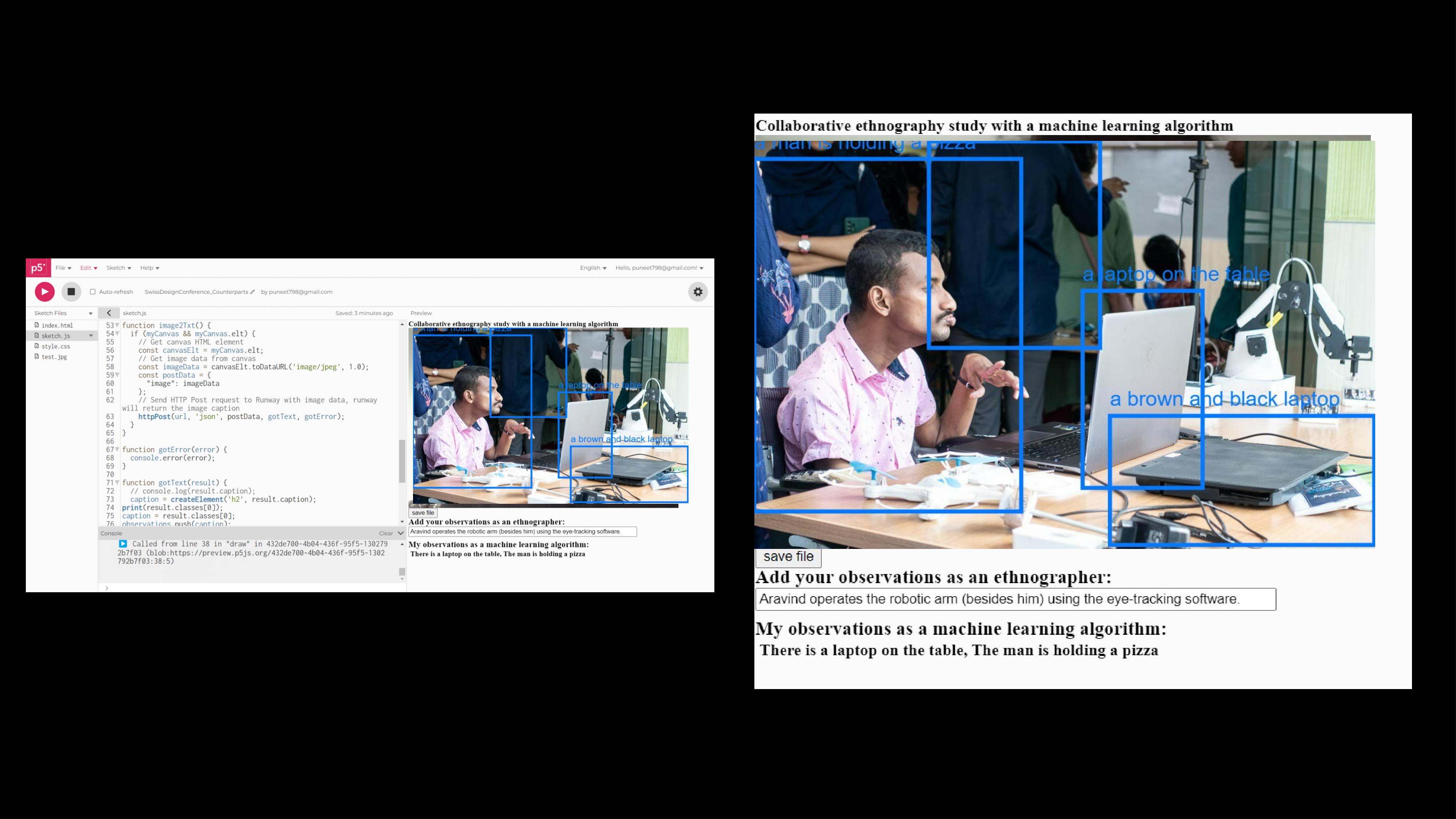

Example video of the first prototype of the interface.

Methodology

The human ethnographer uses a visual ethnographic approach (Collier, 1986) to observe and describe the culture around him, typing his observations into a web-based interface built with the p5.js JavaScript library. The interface also uses DenseCap, a pre-trained machine learning model provided through RunwayML (https://runwayml.com/), which captions images in real time from a handheld camera carried by the ethnographer. Its captions are displayed on screen and logged into the database.

Findings

The following observations were made during the first trial run of the interface:

- The human ethnographer recorded his observations patiently and at a more deliberate pace than the machine learning algorithm, which logged 10-20 observations per minute.

- While the human never repeated an observation once it had been added to the database, the machine learning algorithm often logged the same findings repeatedly.

- The final database juxtaposed the observations of the human ethnographer with those of the machine learning algorithm.
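The shared logging setup described under Methodology, and the first two findings, can be illustrated with a minimal sketch. It is written in Python and is not the project's p5.js code. `EthnographyLog`, its methods, and `fake_captioner` are hypothetical names. The captioner is only a stand-in for DenseCap and does not call RunwayML's actual API. The sketch tags every entry with its source ("human" or "machine") and keeps both streams in one time-ordered log. It then computes the two quantities the findings compare: entries per minute and repeated entries. The captions and timings in the usage lines are invented for illustration and are not data from the trial run.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Any, Callable, Iterable, List


@dataclass(frozen=True)
class Observation:
    timestamp: float  # seconds since the session started
    source: str       # "human" or "machine"
    text: str


class EthnographyLog:
    """Hypothetical shared log in which human and machine observations sit side by side."""

    def __init__(self) -> None:
        self.entries: List[Observation] = []

    def log_human(self, timestamp: float, text: str) -> None:
        # A note the ethnographer types into the interface.
        self.entries.append(Observation(timestamp, "human", text.strip()))

    def log_machine(self, timestamp: float, frame: Any,
                    captioner: Callable[[Any], Iterable[str]]) -> None:
        # `captioner` is a placeholder for a dense-captioning model such as DenseCap:
        # it takes one camera frame and returns zero or more region captions.
        for caption in captioner(frame):
            self.entries.append(Observation(timestamp, "machine", caption.strip()))

    def per_minute(self, source: str, session_seconds: float) -> float:
        # Average number of entries from one source per minute of the session.
        count = sum(1 for e in self.entries if e.source == source)
        return count / (session_seconds / 60.0)

    def repeats(self, source: str) -> int:
        # Entries whose text (case-insensitive) already appeared earlier from the same source.
        counts = Counter(e.text.lower() for e in self.entries if e.source == source)
        return sum(n - 1 for n in counts.values())

    def merged(self) -> List[Observation]:
        # The juxtaposed database: all entries in time order, regardless of source.
        return sorted(self.entries, key=lambda e: e.timestamp)


def fake_captioner(frame: Any) -> List[str]:
    # Hypothetical stand-in that always "sees" the same two regions.
    return ["a man holding a drill", "a wooden table"]


log = EthnographyLog()
for second in range(0, 60, 10):  # one frame every 10 s for one minute
    log.log_machine(float(second), None, fake_captioner)
log.log_human(42.0, "People share tools without asking.")

print(log.per_minute("machine", 60))  # 12.0
print(log.repeats("machine"))         # 10
print(log.repeats("human"))           # 0
```

Keeping a single list with a source field, rather than two separate tables, mirrors the idea of one juxtaposed database. The per-source queries can still separate the human and machine streams when they need to be compared.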
