Waiting for Hands

Waiting for Hands is a work-in-progress XR performance, co-created with Yesica Duarte and Hema Kumari during the Bodies-Machines-Publics residency at Khoj Studios in 2024. Virtual Reality (VR) is often hailed as the “ultimate empathy machine,” a framing that treats disability as an experience to be simulated through such technologies, reducing it to a spectacle of pity or inspiration. If that is the case, if empathy is the knife, we ask: what ethical stance can an XR performance take to attune participants to a non-normative body schema while resisting spectacle?

Crip Sensorama: Christian's Coffee

This is the second version of Crip Sensorama, titled Crip Sensorama: Christian’s Coffee, exhibited at the Ars Electronica Festival for Art, Technology and Society in Linz, Austria. This version was made possible by the care and support of my friends and collaborators Yesica Duarte, Christian Bayerlein, and Eric Desrosiers.

Christian and his coffee

Christian Bayerlein is an artist, technologist, and activist who has Spinal Muscular Atrophy (SMA), due to which he has almost no movement in his upper and lower body.

Crip Sensorama: Eric's Paintings

This is the first version of Crip Sensorama, titled Crip Sensorama: Eric’s Paintings, developed with the immense support of my friend and collaborator Eric Desrosiers. Initiated during the FOUNDING LAB Fall term (September 2023 to January 2024), it is a 10-12 minute interactive XR artwork, exhibited at the xCoAx conference in Treviso, Italy, in July 2024.

Eric and his paintings

Eric is a painter in his mid-50s who has muscular dystrophy, a degenerative neuromuscular disease.

Re-imagining XR with people with sensorimotor disabilities through criptastic hacking

"The Future is Disabled" - Leah Lakshmi Piepzna-Samarasinha. This is a post about the making of a series of interventions that my collaborators, friends, and I call ‘Crip Sensorama’. Crip Sensoramas are interactive, multi-sensory VR/AR works that investigate how the ableist technologies of XR (eXtended Reality) could be re-imagined and hacked (in their early stages) to act as a platform for storytelling for (and with) people with disabilities, shaping their own future imaginaries by opening a portal into disability culture and living.

Dwell Time (VR/AR)

[photo credits: Agustina Isidori, in picture: Lynn Hughes] [photo credits: Agustina Isidori, in picture: Rochana Fardon] Drawing on my close interventions with people with disabilities as a non-disabled Human-Computer Interaction researcher, building assistive technologies with (and for) disabled people, this work is a VR/AR self-reflection (a scrapbook) on my evolving artistic and scientific practice. In the field of Human-Computer Interaction and eye-tracking studies, 'Dwell Time' is the time one has to gaze at a key on a screen to activate it.
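To make the definition of dwell time concrete, here is a minimal sketch of dwell-based selection, assuming a gaze tracker that reports which key (if any) is under the gaze at each timestamp. The class name `DwellSelector` and the 0.8 s threshold are hypothetical and not taken from the work itself.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DwellSelector:
    """Hypothetical dwell-time selector: a key activates once gaze has rested
    on it continuously for `dwell_s` seconds (threshold is an assumption)."""
    dwell_s: float = 0.8
    _key: Optional[str] = None   # key currently under the gaze
    _since: float = 0.0          # time at which the gaze arrived on that key
    _fired: bool = False         # stops the same key firing repeatedly

    def update(self, key: Optional[str], t: float) -> Optional[str]:
        """Feed one gaze sample (key under gaze, or None) taken at time t.
        Returns the key when it activates, otherwise None."""
        if key != self._key:
            # Gaze moved to a new key (or off the keyboard): restart the timer.
            self._key, self._since, self._fired = key, t, False
            return None
        if key is not None and not self._fired and t - self._since >= self.dwell_s:
            self._fired = True
            return key
        return None
```

Feeding the samples `("A", 0.0), ("A", 0.5), ("A", 0.9)` activates "A" on the third sample; the key then stays silent until the gaze leaves and returns, which is one simple way to avoid repeated activations (the "Midas touch" problem) while the user keeps looking.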

Navigating in VR using free-hand gestures and embodied controllers (ACM VRST 2023)

This is a short paper published at the ACM Symposium on Virtual Reality Software and Technology (VRST) 2023, held in Christchurch, New Zealand. In my PhD research, which explores how XR (eXtended Reality) technologies can be made accessible to people with sensorimotor disabilities, I am always aware that the hand-held controllers assumed to be the entry point for navigating virtual spaces (for able-bodied folks) become barriers that keep people with bodily disabilities from accessing VR/AR technologies.

Animate (an eXtended Reality [XR] Theatre)

Animate is a theatrical VR/AR (virtual and augmented reality) artwork on the theme of a dystopian climate crisis, set in the year 2050. Animate was exhibited at KunstFest in Weimar, Germany (August 2022) and at the PAD Festival in Darmstadt, Germany (October 2022). The project was led by Canadian-American media artist Chris Salter. [Animate Trailer]

Disability Hackathon

Motivated by the concept of the criphackathon from disability-led activism, my friends from the Indian Institute of Science, Bangalore, and I conducted a disability hackathon at the Indian Institute of Technology, Chennai, in October 2022.

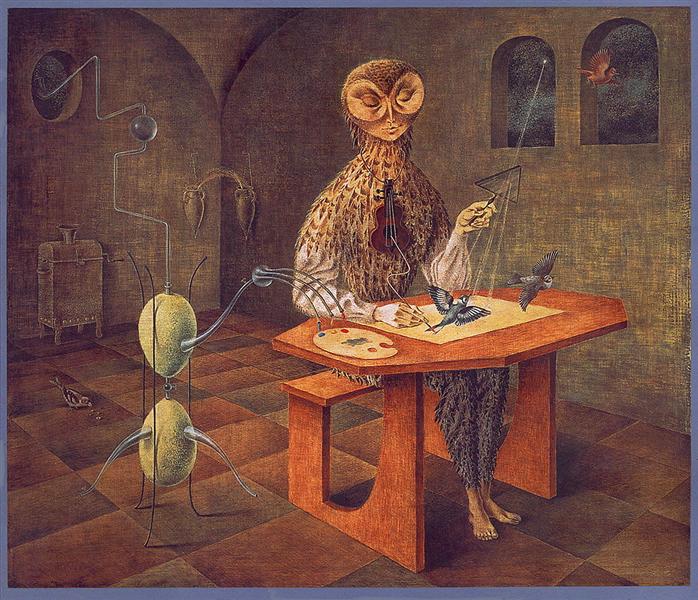

Creation of Birds

[Promo Video of the work, Kaldi Moss] ‘Creation of Birds’ draws its inspiration from German biologist Jakob Johann von Uexküll’s concept of “umwelt”, asking how we can design interfaces that enable us to adapt to uncommon sensory spectrums. By inviting the human to playfully engage with a “sensing” algorithm and a bird in the sky, this work (a web-based application) investigates our relationships with more-than-human scales of perception.

Epistemological Intervention: Collaborative Ethnography between a human and a machine-learning algorithm

Epistemological Intervention (EI) is a research-creation work situated at the intersection of human-computer interaction, anthropology, and artificial intelligence research, asking the following research question: How can we design interfaces that bring together the human and the non-human to create new forms of epistemologies? EI thus brings a human and a non-human (a machine-learning algorithm) into collaboration to search for new ethnographic methodologies of knowing the world, what anthropologist Dara Culhane refers to as “co-creative” knowledge making.

Can we talk?

"If you touch a single banana with your two hands, you feel one banana, not two. Thus, the images of the two eyes must be “fused” somewhere in the brain to give rise to a unitary item of perception, or percept. But we can ask the questions, What if the eyes look at completely dissimilar things? Would we perceive a blend?" - Vilayanur S. Ramachandran and Diane Rogers-Ramachandran (When the Two Eyes Clash, 2007).

AutonomX

AutonomX is an open-source desktop application that lets multimedia artists and students quickly experiment with life-like processes through a graphical interface. It generates dynamic, emergent, and self-organizing patterns and outputs them via OSC and MIDI to control light, sound, video, or even robots in real time, unlike fixed systems such as timelines and cue systems. AutonomX was incubated at the xModal Lab at the Milieux Institute for Arts, Culture and Technology, Concordia University, Canada.

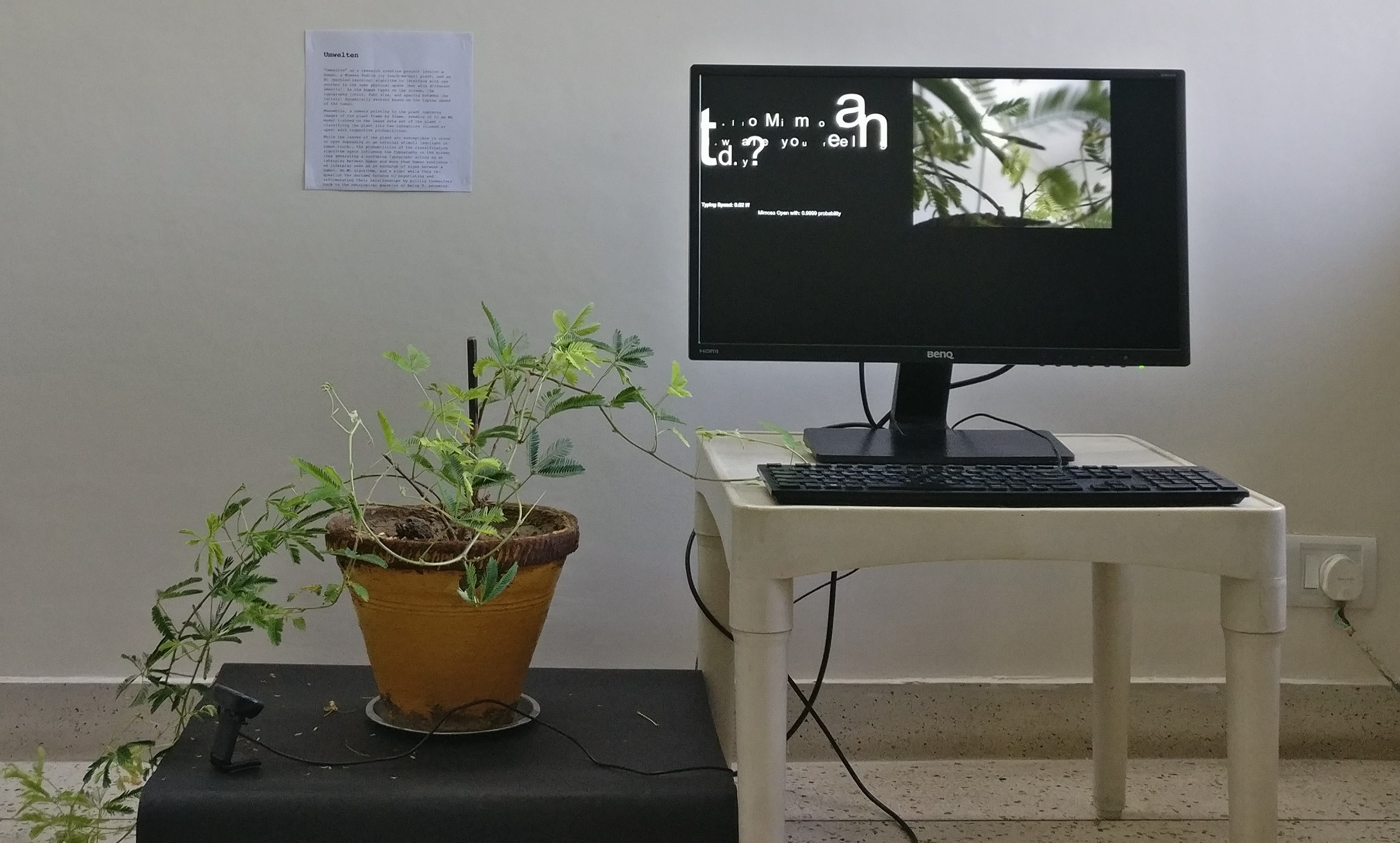

Umwelten

[photo credits: Puneet Jain] Umwelten is an interactive installation that initiates a collaboration between a human, a non-human (a touch-me-not plant, Mimosa pudica), and a machine-learning (ML) algorithm, depicting a playful circulation of agency among the three entities over time. This work borrows the concept of ‘umwelt’ (environment) coined by the German biologist Jakob Johann von Uexküll, inviting the human, the plant, and the ML algorithm to interact with one another in the same physical space but with different sensory envelopes (or umwelten).

Ethnographic Study - people with visual impairment

This is my ethnographic study with people who are visually impaired. Before beginning, I want to confess that I am using the word ethnography in a loose sense here. While my regular visits to the National Association for the Blind (NAB), Bangalore, and the Captain Chand Lal school (a school for children with visual impairment) in Delhi, making notes on their daily activities, conversations with their trainers, studying the tools used by them (e.

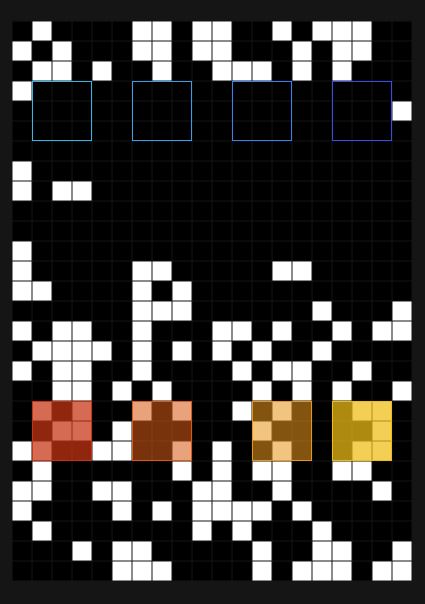

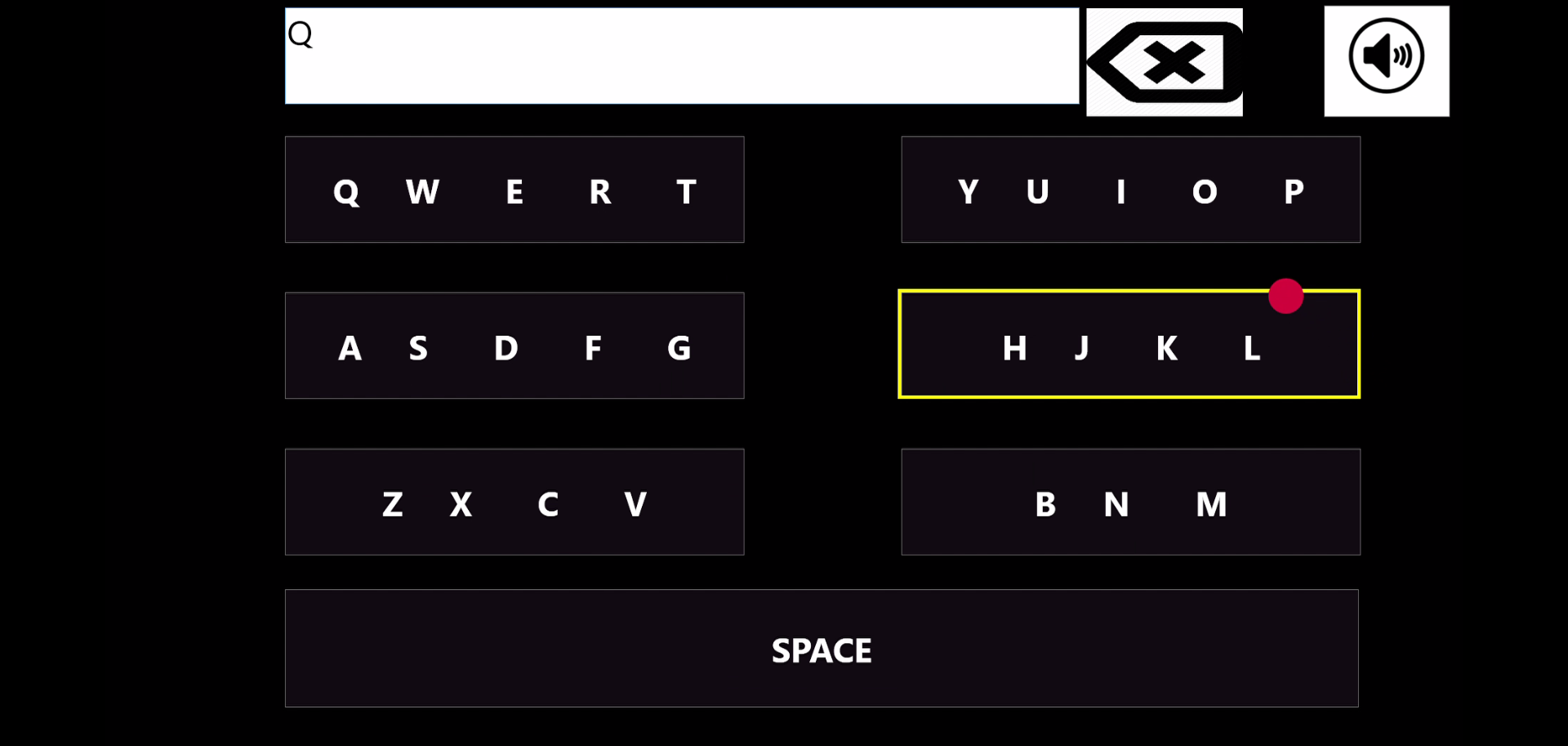

Adaptive Gaze Controlled Virtual Keyboard for people with sensorimotor disabilities

The common input modalities people use to interact with a computer are largely limited to a mouse or a physical keyboard, both mostly operated by hand. These devices are of little use when users have motor impairments or constrained body movements. This project is a virtual keyboard operated through eye gaze rather than hands. The keyboard has a modified QWERTY layout and is integrated with a customized machine-learning algorithm that dynamically adapts the dwell time of the keys.
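The page does not describe the customized machine-learning algorithm, so the sketch below swaps in a deliberately simple rule-based stand-in (not machine learning) to show what "dynamically adapting dwell time" per key can mean: lengthen a key's dwell after a selection the user corrects, shorten it slightly after one that is accepted, and clamp it to bounds. All names and constants (`AdaptiveDwell`, 1.0 s base, 0.4 to 2.0 s range, 0.1 s step) are hypothetical.

```python
from collections import defaultdict


class AdaptiveDwell:
    """Hypothetical per-key dwell times updated from user corrections.
    A rule-based illustration only, not the keyboard's actual ML algorithm."""

    def __init__(self, base_s: float = 1.0, min_s: float = 0.4,
                 max_s: float = 2.0, step: float = 0.1) -> None:
        self.min_s, self.max_s, self.step = min_s, max_s, step
        # Every key starts at the same base dwell time (seconds).
        self.dwell = defaultdict(lambda: base_s)

    def dwell_for(self, key: str) -> float:
        return self.dwell[key]

    def feedback(self, key: str, corrected: bool) -> None:
        """corrected=True means the selection was undone (e.g. followed by backspace)."""
        # Slow down error-prone keys quickly; speed up reliable keys gently.
        delta = self.step if corrected else -self.step / 2
        self.dwell[key] = min(self.max_s, max(self.min_s, self.dwell[key] + delta))
```

The asymmetric step is a design choice for the sketch: an accidental activation costs the user a correction, so the rule backs off faster than it speeds up. A learned model would replace `feedback` with predictions from richer signals (gaze stability, typing history, key position).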

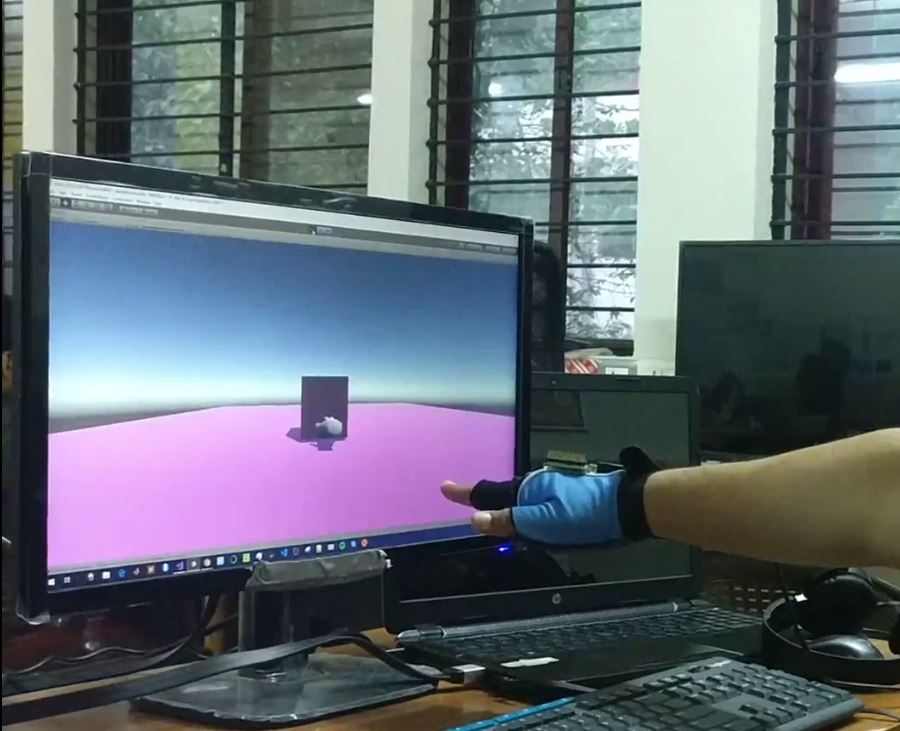

Inertial Motion Sensing and Haptics in Unity3D

This was a small attempt to understand how wearable gloves work and the various ways in which haptics can be integrated into such a device. The glove I used is the ‘Capto Glove’, purchased from the company of the same name. Currently, the glove contains an IMU (Inertial Measurement Unit) that provides accelerometer, magnetometer, and gyroscope readings along three axes. It also offers other features, such as bending-force calculation and finger-pressure readings.
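One common way to turn raw IMU readings like these into a stable hand orientation is a complementary filter: integrate the gyroscope for fast response and blend in the accelerometer's gravity-based tilt to cancel gyro drift. The sketch below estimates pitch only and does not use the Capto Glove SDK; it assumes accelerometer values in g, gyroscope rates in degrees per second, a fixed sample period `dt`, and one particular axis convention.

```python
import math


def accel_pitch_deg(ax: float, ay: float, az: float) -> float:
    """Pitch (degrees) from the direction of gravity; valid when the hand is roughly still.
    Axis convention is an assumption and may differ from the glove's."""
    return math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))


def complementary_filter(samples, dt: float, alpha: float = 0.98):
    """Fuse gyro pitch rate with accelerometer pitch.

    samples: iterable of (gyro_pitch_rate_dps, ax, ay, az)
    Returns a list of fused pitch estimates in degrees.
    """
    pitch = None
    out = []
    for rate, ax, ay, az in samples:
        acc = accel_pitch_deg(ax, ay, az)
        if pitch is None:
            pitch = acc  # initialise from the accelerometer
        else:
            # Mostly trust the integrated gyro, nudged towards the accelerometer.
            pitch = alpha * (pitch + rate * dt) + (1 - alpha) * acc
        out.append(pitch)
    return out
```

With the glove held level but the gyroscope reporting a constant 10 deg/s bias at 100 Hz, pure integration would drift by 100 degrees over 10 seconds, while this filter settles at about 4.9 degrees. The magnetometer could be blended in the same way to correct yaw, which gravity alone cannot observe.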

Cybernetic Plant Human Interface

This project is a proof of concept for opening a dialogue between a human and a non-human (a Mimosa plant) in a digital space where they co-exist, share the space, and compete for their respective goals, interacting with each other in a cybernetic performance that unfolds over time.

Introduction

The intake of digital information to date has been heavily based on rectangular computer screens that demand acute visual focus from the observer; this intake is also overwhelmingly visual from a cultural and social sensory point of view.

Solar Energy Recommendation Tool (NASA Space Apps Challenge 2017)

Helios is a tool that was named a global nominee at the NASA Space Apps Challenge 2017. Helios helps people understand the amount of energy generated by different types of solar panels and relate it to daily household items. Helios also helps the HI-SEAS crew plan their daily activities by giving personalized recommendations.

The Problem

In the past decade we have seen a growing inclination towards the use of solar energy around the globe.
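The kind of arithmetic behind "relating generated energy to household items" can be sketched with the common rule of thumb: daily energy is roughly rated panel power times peak sun hours times a performance ratio for losses, and dividing that by an appliance's power gives how long it could run. This is not Helios's model; the 0.75 performance ratio, the panel rating, and the appliance wattages are illustrative assumptions, and a panel type enters only through its rated wattage.

```python
# Illustrative appliance ratings in watts (assumed values, not Helios data).
APPLIANCES_W = {"LED bulb": 10, "laptop": 60, "fan": 75}


def daily_energy_wh(panel_watts: float, peak_sun_hours: float,
                    performance_ratio: float = 0.75) -> float:
    """Rule-of-thumb daily output: rated power x peak sun hours x loss factor."""
    return panel_watts * peak_sun_hours * performance_ratio


def household_equivalents(energy_wh: float, appliances=APPLIANCES_W) -> dict:
    """Hours each appliance could run on the given energy."""
    return {name: energy_wh / watts for name, watts in appliances.items()}
```

For a hypothetical 300 W panel at a site with 5 peak sun hours, `daily_energy_wh(300, 5.0)` gives 1125 Wh, which `household_equivalents` turns into 112.5 hours of an LED bulb, 18.75 hours of a laptop, or 15 hours of a fan: numbers that are easier to plan a day around than kilowatt-hours.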

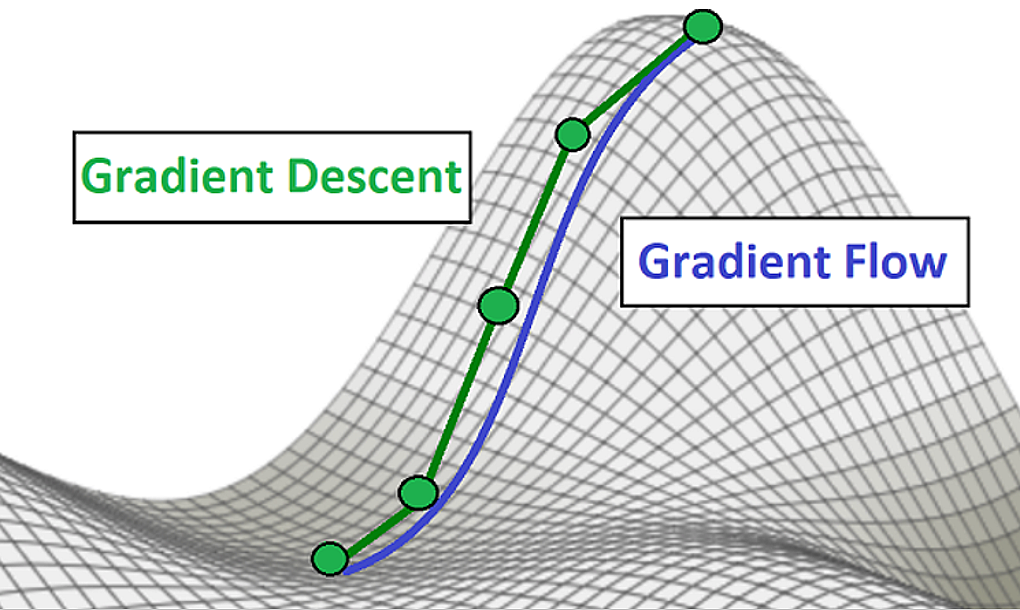

Genealogy of Neural Networks - A short essay

Medical prescriptions from a chatbot on a website, songs recommended by a cylinder in a dining room, and cars driving on a highway without human assistance portray a world surrounded by “intelligent” entities, with an intelligence equivalent to that of a human brain. Indeed, all of these technologies share a common architecture, a neural network, that imitates the human brain to learn patterns in datasets, optimize its decisions, and predict results in order to act on the outside world.
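To ground what "learning patterns in datasets" means at the smallest scale, here is a sketch of a single perceptron, the brain-inspired unit from which neural networks descend, learning the logical AND function with the classic perceptron update rule. It is a toy illustration chosen for this essay, not the architecture of any of the products mentioned above; the function names and the AND dataset are assumptions.

```python
def predict(w, b, x):
    """Step activation: 'fire' (1) if the weighted sum of inputs plus bias exceeds 0."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


def train_perceptron(data, epochs=20, lr=1):
    """Perceptron learning rule: nudge weights by (target - prediction) * input."""
    n = len(data[0][0])
    w, b = [0] * n, 0
    for _ in range(epochs):
        for x, target in data:
            err = target - predict(w, b, x)
            w = [wi + lr * err * xi for wi, xi in zip(w, x)]
            b += lr * err
    return w, b


# Logical AND: output 1 only when both inputs are 1.
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
```

After `w, b = train_perceptron(AND_DATA)`, the unit classifies all four cases correctly. Because AND is linearly separable the rule is guaranteed to converge; a single perceptron cannot learn XOR, a limitation that later multi-layer networks trained with backpropagation overcame.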

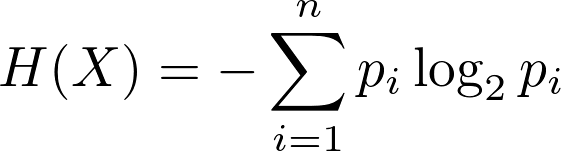

Intertwinement of Entropy, Information, and Thermodynamics - A short essay

In the mid-20th century, the concept of communication, which had mostly been tied to a source and a physical medium, was challenged and ontologically shifted by the mathematician Claude Shannon through the construction of a grand unified theory of communication. Shannon achieved this extraordinary goal by quantifying the information associated with a message, the capacity of the communication channel through which the message passes, the coding processes needed to make that channel efficient, and the noise that could arise in such a system along with its effect on the accuracy of the received message, all by using a single concept from the physical sciences: entropy.
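Shannon's entropy of a source that emits symbol x with probability p(x) is H(X) = -Σ p(x) log₂ p(x), measured in bits. The sketch below estimates it from the symbol frequencies of a single message, under the simplifying assumption that symbols are drawn independently with those frequencies.

```python
import math
from collections import Counter


def shannon_entropy(message: str) -> float:
    """Entropy in bits per symbol, using the message's own symbol frequencies as p(x)."""
    if not message:
        return 0.0
    n = len(message)
    counts = Counter(message)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())
```

A message of one repeated symbol such as "aaaa" carries 0 bits per symbol (nothing is uncertain), "ab" carries 1 bit, and "abcd" carries 2 bits. Under the independence assumption, this value is also the lower bound on the average number of bits per symbol any lossless code can achieve, which is how the same quantity links information to the efficiency of coding.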